The AI Amplifier: Supercharging Your Rock Music Creation in the Digital Age

Unleash your inner rockstar! Dive into how advanced computational tools are revolutionizing rock music creation, blending human passion with digital precision. Discover how to supercharge your riffs, find fresh ideas, and keep the raw soul of your music fiercely human, even as technology amplifies your creative power.

MUSIC-PRODUCTION

Rod Meier

7/7/2025 · 18 min read

Introduction: The New Sound Frontier – Rocking with AI

The music industry stands as a testament to constant evolution, where technological advancements perpetually redefine the boundaries of artistic expression. From the raw, unfiltered energy that characterized early rock and roll to the meticulously crafted and polished productions of today, innovation has remained an unwavering companion. Currently, the industry finds itself on the cusp of another profound transformation: the integration of Artificial Intelligence into the very fabric of music creation. This burgeoning synergy is not merely about replacing human artistry; rather, it represents a powerful opportunity to amplify creative potential, opening up entirely new avenues for expression and efficiency within the musical landscape.

The prevailing narrative surrounding AI in creative fields often conjures images of machines composing soulless tracks, devoid of genuine emotion. However, the reality unfolding is far more exhilarating. Artificial Intelligence is rapidly emerging as a formidable co-creator, an inspiring muse, and an indispensable assistant for musicians across genres, particularly within the dynamic and expressive realm of rock music. Imagine the liberation of overcoming writer's block with a simple command, generating intricate drum patterns in mere seconds, or exploring complex harmonies that previously seemed unimaginable. This is the compelling promise of AI in rock music: a future where the boundless ingenuity of human artistry and the precision of algorithmic capabilities converge to forge unforgettable anthems.

A significant shift in perception is currently underway regarding the role of AI in creative endeavors. Initially, many viewed AI as an existential threat to human artistry, fearing that it would diminish the unique "soul" inherent in human-made music. This apprehension often stemmed from the idea of full automation leading to a loss of emotional depth in artistic output. However, a growing understanding reveals that AI functions primarily as a sophisticated tool or collaborator, designed to augment human capabilities rather than displace them. This reframing of AI from a competitor to a creative partner is pivotal for its widespread adoption and for fostering continued innovation within the artistic community. The historical trajectory of music technology consistently demonstrates that new tools, once met with skepticism, ultimately expand human creative potential, leading to new forms of expression rather than limiting them.

Chapter 1: Echoes of Innovation – A Brief History of Music Technology

Music's journey has always been deeply intertwined with technological progress. Long before the advent of artificial intelligence, artists eagerly embraced novel tools to expand their sonic palettes and streamline their creative processes. This historical progression from purely acoustic instruments to the digital age laid the essential groundwork for the current AI revolution, illustrating a continuous human drive to innovate and experiment with sound.

The early 20th century witnessed the emergence of groundbreaking electronic instruments such as the Theremin and Ondes Martenot. However, it was Robert Moog's pioneering development of the Moog synthesizer in the 1960s that truly propelled electronic sound into the mainstream consciousness. Initially perceived as avant-garde and lacking commercial potential, synthesizers swiftly permeated popular music, becoming instrumental in defining the distinctive sounds of the 1970s and 1980s. Their unparalleled ability to generate previously unimaginable textures and timbres fundamentally reshaped musical expression. The initial skepticism surrounding synthesizers, later overcome by their widespread artistic adoption, mirrors the current discussions around AI's role in music, suggesting a pattern of technological integration that ultimately expands creative horizons.

Parallel to the rise of synthesizers, the technique of sampling began to revolutionize music production. Sampling, which involves reusing portions of sound recordings in new compositions, traces its conceptual roots back to the 1940s with the musique concrète movement. The introduction of digital samplers in the 1970s and 1980s, exemplified by instruments like the Fairlight CMI and the E-mu Emulator, brought this technique to a broader audience of musicians. These digital tools transformed genres such as hip-hop and electronic music, allowing artists to manipulate and integrate diverse sounds with unprecedented precision and ease. The early legal challenges faced by sampling, particularly regarding copyright, also foreshadow the complex intellectual property issues now confronting AI-generated content, highlighting a recurring tension between artistic innovation and established legal frameworks.

A significant leap forward in digital music communication arrived with the Musical Instrument Digital Interface, or MIDI. This groundbreaking protocol enabled seamless communication between various electronic musical instruments and software. MIDI transmits crucial data, including notes, tempo, dynamics, and effects, offering immense flexibility for creating intricate arrangements, layering diverse sounds, and controlling virtual instruments from a single source. The establishment of MIDI as a universal language for digital music was pivotal, as it provided the foundational infrastructure for complex digital arrangements and the control of virtual instruments, which are indispensable components of modern Digital Audio Workstations and, by extension, AI integration. MIDI's capacity to facilitate "unique and unexpected sound combinations" laid conceptual groundwork for the generative capabilities seen in contemporary AI music tools.
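
To make the idea of MIDI as a shared language more concrete, here is a minimal Python sketch of how a single note is encoded. A Note On message is three bytes: a status byte (0x90 plus the channel number), the note number, and the velocity. The helper names `note_on` and `note_off` are illustrative assumptions and do not belong to any particular library.

```python
def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a raw 3-byte MIDI Note On message.

    channel is 0-15 on the wire (shown as 1-16 in most DAWs);
    note and velocity are 7-bit values, 0-127.
    """
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not 0 <= note <= 127 or not 0 <= velocity <= 127:
        raise ValueError("note and velocity must be 0-127")
    return bytes([0x90 | channel, note, velocity])


def note_off(channel: int, note: int) -> bytes:
    """Build a raw 3-byte MIDI Note Off message with release velocity 0."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not 0 <= note <= 127:
        raise ValueError("note must be 0-127")
    return bytes([0x80 | channel, note, 0])


# Low E on a standard-tuned guitar is MIDI note 40 (E2, with middle C = 60)
riff_start = note_on(channel=0, note=40, velocity=100)
riff_end = note_off(channel=0, note=40)
```

Sending these bytes to an instrument or a DAW requires a MIDI input/output library, which this sketch leaves out on purpose.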

The culmination of these technological advancements manifested in the Digital Audio Workstation (DAW). These software platforms effectively replaced traditional tape-based recording studios, transforming the entire music production paradigm. DAWs rapidly became the central hub for multi-track recording, non-destructive editing, mixing, and mastering, making professional-level music production accessible to a far wider audience, including individuals setting up home studios. The evolution of DAWs represents a consolidation of previous technologies into a single, powerful software environment. This development significantly democratized access to advanced production techniques, making them widely available and setting the stage for the seamless integration of AI within these comprehensive platforms.

Each of these innovations—synthesizers, samplers, MIDI, and DAWs—was far more than a mere technical upgrade; each constituted a revolution in artistic expression. Synthesizers unlocked entirely new sonic textures, samplers enabled the recontextualization of existing sounds, MIDI facilitated complex and precise arrangements, and DAWs democratized the entire production process, bringing professional capabilities to the fingertips of individual creators. This consistent pattern demonstrates that technological advancements in music are not simply incremental improvements; they fundamentally redefine artistic possibilities, creative workflows, and even the very definition of a "musician" or "composer." The initial resistance or skepticism that often accompanies new technologies frequently gives way to their widespread adoption and integration into the artistic mainstream. This historical progression underscores that the "human element" in music is not displaced by technology but rather adapts and discovers new avenues for expression within an evolving technological landscape. This historical perspective provides a compelling argument for embracing AI in music, positioning it as the latest in a long lineage of transformative tools that expand human creativity, rather than diminishing it.

Chapter 2: The Digital Studio – DAWs as Your Creative Command Center

For any contemporary rock musician or producer, the Digital Audio Workstation (DAW) serves as the indispensable nerve center of their creative operations. It is within this powerful software environment that nascent musical ideas are captured, meticulously shaped, and ultimately brought to vibrant life. Industry-standard DAWs such as Pro Tools, Logic Pro X, Cubase, Ableton Live, and Studio One offer comprehensive suites of tools that cater to every stage of modern music production. These platforms are the engine that drives the creative process, enabling recording, editing, mixing, and mastering with unparalleled precision and creative freedom.

DAWs excel in facilitating multi-track recording, a cornerstone of contemporary music production. This capability allows individual instruments—be it drums, guitars, bass, or vocals—to be captured on separate tracks. The inherent non-destructive editing features mean that artists can experiment freely, correct performance imperfections without the need for entire re-takes, and fine-tune every subtle nuance of a recording. Beyond basic edits, advanced tools within DAWs, such as audio quantization for rhythmic precision, pitch correction for vocal perfection, and time-stretching for manipulating audio duration, offer an extraordinary level of control over recorded material. This granular control is particularly vital for the layered and often intricate arrangements characteristic of rock music.
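
As a rough illustration of what quantization does, the sketch below snaps note onsets to a rhythmic grid. It is a simplification, not how any specific DAW implements the feature: it assumes onsets are already expressed in beats, and the `strength` parameter is an illustrative stand-in for the partial-quantize controls many tools offer.

```python
def quantize(onsets, grid=0.25, strength=1.0):
    """Pull each onset (in beats) toward the nearest grid line.

    grid=0.25 is a sixteenth note when one beat is a quarter note.
    strength=1.0 snaps fully; lower values keep some human feel.
    """
    if grid <= 0:
        raise ValueError("grid must be positive")
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    quantized = []
    for t in onsets:
        nearest = round(t / grid) * grid
        quantized.append(t + (nearest - t) * strength)
    return quantized


# A slightly loose drum take, with onsets measured in beats
take = [0.02, 0.27, 0.49, 1.01]
tight = quantize(take)                # fully snapped to sixteenth notes
loose = quantize(take, strength=0.5)  # moved only halfway to the grid
```

A strength below 1.0 is one way to tighten a performance while keeping some of the push and pull that gives a rock groove its character.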

Beyond the initial recording and editing phases, DAWs are equipped with robust mixing and mastering capabilities. These tools are crucial for shaping the overall sonic landscape of a track. Equalizers allow for precise sculpting of frequency content, ensuring each instrument occupies its own sonic space and contributes to a balanced mix. Compressors manage dynamic range, making sure that volume levels remain consistent and impactful. Reverb and delay plugins add spatial depth and atmosphere, transforming a dry recording into an immersive sonic experience. These built-in and third-party tools are essential for achieving a polished, professional sound that is ready for release. The ability to precisely control these elements ensures that rock music retains its characteristic punch, clarity, and emotional impact.
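
The way a compressor manages dynamic range can be sketched with its static gain curve. The example below assumes a hard-knee design working on levels in decibels and ignores attack, release, and make-up gain, so real plugins will behave differently. The function name and default settings are illustrative assumptions.

```python
def compress_db(level_db, threshold_db=-18.0, ratio=4.0):
    """Return the output level in dB for a hard-knee compressor.

    Signals at or below the threshold pass unchanged. Above it,
    every `ratio` dB of input yields only 1 dB of extra output.
    """
    if ratio < 1.0:
        raise ValueError("ratio must be at least 1")
    if level_db <= threshold_db:
        return level_db
    return threshold_db + (level_db - threshold_db) / ratio


# A loud snare hit at -6 dB and a quiet ghost note at -30 dB
snare_out = compress_db(-6.0)   # 12 dB over the threshold becomes 3 dB over
ghost_out = compress_db(-30.0)  # below the threshold, left untouched
```

With these settings the gap between the two hits shrinks from 24 dB to 15 dB, which is the sense in which compression evens out a performance.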

While rock music traditionally relies heavily on live instrumentation, modern DAWs seamlessly integrate virtual instruments (VIs). These software-based instruments are capable of emulating a vast array of sounds, from classic synthesizers and drum machines to full orchestral libraries. This expands a musician's sonic palette dramatically, eliminating the need to purchase and house extensive physical hardware. The versatility offered by virtual instruments empowers rock musicians to experiment with sounds beyond conventional rock instrumentation or to complement their live recordings with unique textures, fostering greater creative freedom and sonic exploration.

The very essence of rock music is often defined by its driving rhythms, memorable guitar riffs, and solid basslines. DAWs provide the ideal environment for meticulously crafting these foundational elements. Musicians can program intricate drum patterns using built-in sequencers or virtual drum machines, sequence MIDI data for bass and guitar parts, or record live performances and then fine-tune them with surgical precision. This level of control allows for the tight, impactful sound that is a hallmark of rock, which frequently relies on a strong, repetitive backbeat and powerfully distorted guitars. The ability to manipulate these core components with such detail ensures the genre's characteristic energy and cohesion.

The widespread adoption of Digital Audio Workstations has profoundly transformed the music industry by democratizing professional production capabilities. DAWs have effectively replaced the expensive, traditional tape-based recording studios, offering an accessible and versatile means of creating music. This shift has significantly lowered the barriers to entry for high-quality music production, making it affordable and available to individual artists who can now establish fully functional studios in their own homes. This democratization has far-reaching implications, shifting power dynamics away from large studios and record labels towards independent artists, thereby fostering a more diverse and self-reliant artistic landscape. The ability to craft professional-sounding tracks without the need for costly equipment fundamentally changes who can create and distribute music, leading to an explosion of new content. However, this also presents the challenge of market oversaturation, where artists must strive to distinguish their work in an increasingly crowded digital environment. This historical trend of democratization, initiated by DAWs, sets the stage for AI's even greater potential to empower individual creators, while simultaneously highlighting the growing importance of discoverability and differentiation in a saturated market.

Chapter 4: The Art of the Blend – Weaving Human Soul with Algorithmic Precision

The true magic in contemporary music production unfolds when human creativity and AI capabilities are seamlessly blended. This approach is not about one replacing the other, but rather about fostering a synergy where the distinct strengths of both are leveraged to produce something greater than either could achieve in isolation.

One effective strategy involves using AI as a dynamic starting point. Musicians can prompt AI to generate initial ideas—be it a compelling chord progression, a driving drum loop, or a captivating melodic motif. Human artists can then take these algorithmic "seeds" and develop them further, infusing their unique touch through live instrumentation, spontaneous improvisation, and emotionally charged performances. This collaborative method proves highly effective in overcoming creative blocks and provides a robust foundation upon which to build a complete song. By positioning AI as a creative spark, it helps artists rapidly generate diverse musical ideas, which human ingenuity can then elaborate upon.

Another powerful integration method involves recording human performances over AI-generated instrumental tracks. This ensures that the "soul" and "lived experience" that only human performance can impart are central to the composition. For rock music, where raw, human-driven performance is highly valued, this approach is particularly effective. Artists can write lyrics and then record their own vocals, guitar riffs, or drum fills over an instrumental track provided by AI, benefiting from the machine's efficiency in building a solid, perfectly timed foundation. This direct integration is crucial for maintaining human authorship and infusing the music with genuine emotional depth and unique character, effectively combining AI's precision with human expressiveness.

Beyond initial generation, AI tools can be invaluable for refinement and enhancement during post-production. After recording human parts, AI can be utilized for sophisticated mixing, mastering, or even subtle vocal transformation to inject additional emotion and texture. AI algorithms can analyze existing tracks, offer detailed feedback, or apply professional-grade processing, ensuring a polished, broadcast-ready sound. This demonstrates AI's role in moving beyond mere generation to become a powerful post-production assistant, enhancing human-created elements with precision and efficiency, and ultimately allowing artists to achieve a higher quality output.

A central concern for many artists is the preservation of the human touch within technologically assisted music. Rock music, in particular, thrives on raw emotion, unbridled energy, and the subtle imperfections that lend a performance its unique character. While AI can mimic emotional tones through pattern recognition and data analysis, it fundamentally lacks the "lived experiences" and "personal stories" that imbue human music with true depth and resonance. The lyrical content and overall narrative, therefore, must remain human-driven to maintain the song's intrinsic soul. This addresses the critical qualitative aspect of music—the human emotional connection—which AI cannot fully replicate, emphasizing that the narrative and emotional core must remain under human direction.

To ensure artistic integrity, the human creator must always remain in control, making the final artistic decisions. This involves thoughtfully curating AI outputs, meticulously refining them, and ensuring they align perfectly with the intended emotional impact and overarching artistic vision. It is an iterative process of generation and evaluation, where the artist's agency and intention guide the machine, ensuring that the music truly reflects their creative will. This concept of the "human in the loop" is paramount, ensuring that AI serves the artist's vision rather than dictating it, thereby preserving artistic integrity and control.

AI significantly accelerates the creative workflow, enabling rapid prototyping and extensive experimentation. Musicians can quickly generate multiple variations of a melody, rhythm, or arrangement, test different ideas, and iterate on them much faster than traditional methods. This newfound freedom to explore new musical landscapes without extensive manual effort can lead to unexpected and innovative results, fostering a more dynamic and less constrained creative process. This highlights AI's role in accelerating the creative cycle, enabling more exploration and refinement, and fostering a truly collaborative dynamic between human and machine.

The integration of AI into music production is fundamentally reshaping the definition of "musical skill" and "craft." While traditional instrumental proficiency and deep music theory knowledge remain invaluable, the emerging "craft" increasingly involves effective prompt engineering, discerning curation of AI outputs, and the ability to seamlessly blend human and machine-generated elements to achieve a desired emotional and artistic outcome. This implies a shift where the creative value lies not solely in the manual execution of music, but also in the intelligent guidance and artistic direction of an AI system. The "magic" now resides not just in playing an instrument, but in skillfully directing an intelligent system to augment one's vision, making artistic direction and curation paramount. This evolution challenges traditional notions of artistic merit and expertise, suggesting that music education and professional development will need to adapt to include AI literacy and human-AI collaborative skills, fostering musicians who are resilient and capable in a technologically advanced industry.

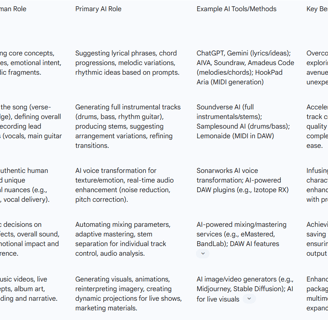

Table 1: Human-AI Collaboration Stages in Rock Production


Chapter 3: Your AI Bandmate – Igniting Creativity with Intelligent Tools

For songwriters, the dreaded phenomenon of writer's block is a universal challenge. Here, Artificial Intelligence can step in as an incredibly powerful brainstorming partner, offering assistance in generating lyrical content ranging from single phrases to complete verses. By providing AI tools with themes, moods, or specific topics, lyricists can expand their vocabulary, introduce fresh imagery, and even refine rhyme schemes and meter. Imagine the creative spark ignited by prompting an AI to "write a verse in the style of Bob Dylan about social change" to kickstart your next protest anthem. This demonstrates AI's utility in the often challenging initial phase of lyric writing, highlighting its role as a creative assistant that can help overcome mental blocks and explore new narrative directions.

AI music generators are rapidly advancing in their ability to understand and replicate complex musical patterns and styles. Tools such as AIVA, Soundraw, Amadeus Code, and Google MusicLM can generate original melodies, harmonies, and even entire compositions based on user input for mood, style, and genre, including the diverse subgenres of rock. These intelligent systems can suggest catchy hooks or explore advanced harmonic structures, providing a vast array of musical ideas that musicians can then build upon and refine. The direct capability of AI in generating core musical elements like melodies and harmonies is crucial for rock's appeal and structural integrity, offering a rapid starting point for musical ideas.

The driving force behind any great rock song is its rhythm section. AI tools are now capable of crafting intricate drum patterns and rich, full-bodied basslines. Unlike traditional manual programming, AI-driven solutions analyze genre-specific patterns to create original, appropriate rhythms. This means musicians can quickly generate foundational beats, experiment with different rock styles—from classic rock to punk and metal—and then utilize stem separation features to meticulously tweak individual elements for that perfect punch and groove. This capability highlights AI's proficiency in handling complex rhythmic elements, which are fundamental to the dynamic and driving nature of rock music, offering both efficiency and creative flexibility in building a robust rhythmic foundation.

Modern AI music generators can produce full instrumental tracks, which can then be deconstructed into individual stems (e.g., vocals, drums, guitars, bass) using AI-powered stem splitter software. This functionality allows musicians to take an AI-generated foundation and then layer their own live performances, refine specific parts, or even completely re-arrange sections. This modular approach offers incredible flexibility for hybrid human-AI productions, ensuring that human creative control remains paramount over the final output. This practical workflow illustrates how AI can provide raw musical material (stems) that humans then sculpt and refine, underscoring the collaborative aspect and the ability to maintain creative direction.

While the emergence of fully AI-generated "bands" like The Velvet Sundown has sparked considerable debate regarding authenticity, many established artists and producers are discreetly integrating AI into various aspects of their workflows. Bands such as Ice Nine Kills and Bring Me The Horizon are leveraging AI for visually striking promotional materials and dynamic live show visuals. Even legendary acts like Guns N' Roses could potentially utilize AI to reinterpret classic imagery for anniversary editions or new releases. The true power of AI in the music industry lies in its capacity as a "multi-purpose tool for studying, learning and in the creative process." These examples demonstrate that AI's utility extends beyond niche electronic artists, finding adoption even among mainstream rock acts, often for non-musical aspects or with less public disclosure, highlighting its growing versatility across various creative domains within the music industry.

There is an observed commercial incentive for artists and labels to utilize AI while simultaneously preserving an image of purely human creativity, especially given public skepticism surrounding AI-generated art. This situation suggests an emerging trend of "ambiguous interaction" or a "hidden hand" of AI in mainstream music production. Artists might extensively employ AI for brainstorming, generating musical parts, mixing, or mastering, yet publicly emphasize the "human" element to maintain authenticity and market appeal. This creates a notable disconnect between the actual production workflow and public perception, making it challenging for listeners to discern the true extent of AI's influence. The controversy surrounding The Velvet Sundown, where the band's AI-generated visuals contradicted their claims of purely human creation, serves as a prime example of this tension. This situation raises important questions about transparency in the music industry, the evolving definition of "authenticity" in the digital age, and the potential for consumer perception to be shaped more by marketing narratives than by the actual creative process.

Chapter 5: Copyright in the AI Era – Protecting Your Masterpiece

For musicians venturing into AI-assisted creation, understanding copyright is paramount. The U.S. Copyright Office has clearly stated that works generated solely by AI, without sufficient human creative input, are not eligible for copyright protection. This means that a song created entirely by an AI music platform, where a human simply provides a text prompt and selects an output, cannot be exclusively owned or protected under copyright law. This fundamental legal principle is crucial for anyone considering AI in music production. Furthermore, simple text prompts are considered "ideas" rather than "expressive creations," and as such, they do not qualify for copyright, establishing a clear threshold for what constitutes creative input.

The pathway to copyrightability in the AI era hinges on demonstrating "sufficient human expression" or "meaningful human authorship." This necessitates that the human creator plays an "essential role in the creative process" and that their creative input is "evident and substantial." This goes beyond merely providing prompts or selecting from AI-generated outputs; it demands active shaping, modification, and artistic direction.

Examples of what constitutes sufficient human input for copyright eligibility include:

Writing original lyrics and thoughtfully pairing them with AI-generated instrumentals.

Manually adjusting the composition, tempo, or arrangement of a track after AI has provided initial assistance.

Recording live vocals or instruments over an AI-generated backing track, thereby adding unique human performance elements.

Engaging in the mixing and mastering of AI-generated stems, blending them with human-created sounds to achieve a cohesive and artistically directed final product.

Modifying or arranging AI-generated content in a "sufficiently creative way" that demonstrates human artistic choices.

When a human contributes their own copyrightable work, and that work is discernible in the final output, they are considered the author of at least that portion of the creation. These examples provide concrete, actionable steps musicians can take to ensure their work meets the human authorship requirement, making the legal concept tangible and applicable.

Given the dynamic nature of this legal landscape, it is imperative for musicians to proactively document their creative involvement. Maintaining detailed records of all modifications, iterations, and specific human-driven decisions made throughout the production process is crucial. This comprehensive documentation can serve as invaluable evidence of authorship should questions of ownership arise, providing a clear paper trail of the human creative input. This practical advice is essential for protecting intellectual property in the AI era, emphasizing the importance of a clear record of human creative contributions.
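
One possible way to keep such records is a simple session log that notes who or what produced each element of a track. The sketch below is only an illustration of the idea: the `ContributionEntry` fields, the function names, and the sample entries are all hypothetical, and nothing here is a format required or recognized by the U.S. Copyright Office.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class ContributionEntry:
    date: str         # ISO date of the session, e.g. "2025-07-07"
    track: str        # working title of the song
    source: str       # "human" or "ai"
    description: str  # what was created or changed


def human_entries(entries):
    """Return only the entries that record human creative work."""
    return [e for e in entries if e.source == "human"]


def to_json_lines(entries):
    """Serialize the log as one JSON object per line for archiving."""
    return "\n".join(json.dumps(asdict(e), sort_keys=True) for e in entries)


# Hypothetical log for a single song
log = [
    ContributionEntry("2025-07-07", "Demo A", "ai",
                      "Generated drum loop from a text prompt"),
    ContributionEntry("2025-07-07", "Demo A", "human",
                      "Wrote lyrics and recorded lead vocals"),
    ContributionEntry("2025-07-08", "Demo A", "human",
                      "Rearranged chorus and re-recorded guitar riff"),
]

archive = to_json_lines(log)
```

Keeping the log alongside dated project files and exported stems would give a clearer picture of which parts of the final track came from human decisions.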

The copyright framework for AI-assisted music often aligns with existing rules for "derivative works"—original works created upon or adapted from pre-existing material. In such scenarios, only the new, original aspects contributed by the human creator can be copyrighted. For instance, if an AI generates individual sounds, but a human creatively arranges them into a unique composition, the arrangement itself might be copyrightable, while the individual AI-generated sounds may not be. This distinction is vital for understanding the precise scope of legal protection.

The legal landscape surrounding AI and copyright is still very much in development, with ongoing debates and significant lawsuits shaping its future. The U.S. Copyright Office is actively examining these issues and regularly issuing guidance. Musicians must stay informed about these evolving policies, as they will continue to impact the industry. The core principle, however, remains steadfast: human creativity must be at the very heart of the work for it to qualify for copyright protection. This emphasizes the real-world risks and the critical importance of adhering to the human authorship requirement, highlighting the need for vigilance and adaptation.

The emergence of AI exacerbates a long-standing philosophical challenge known as the "originality paradox." Copyright law mandates "originality" and "human authorship." Yet, it is widely acknowledged that "all music is, in some way, derivative of other music." AI systems learn by analyzing "vast datasets of musical pieces," a process that is strikingly similar to how human artists develop their styles and learn their craft. This creates a tension between the legal requirement for "originality" and the inherently derivative nature of all creative work, whether human or algorithmic. The legal distinction currently hinges on who makes the "creative choices" and who possesses the "creative spark." This implies that the legal framework is grappling with the very definition of "creativity" in a landscape that extends beyond purely human-centric artistic production, necessitating a re-evaluation of fundamental concepts. In this context, the "human in the loop" becomes not merely a creative choice but a legal imperative to bridge this philosophical gap. This suggests that future legal debates may delve deeper into the philosophical definitions of creativity and consciousness, rather than focusing solely on the output. It also implies that AI's rapid advancement could compel a re-evaluation of copyright law's foundational tenets, as the line between "inspiration" and "copying" becomes increasingly blurred for algorithmic systems, potentially leading to new legal precedents.

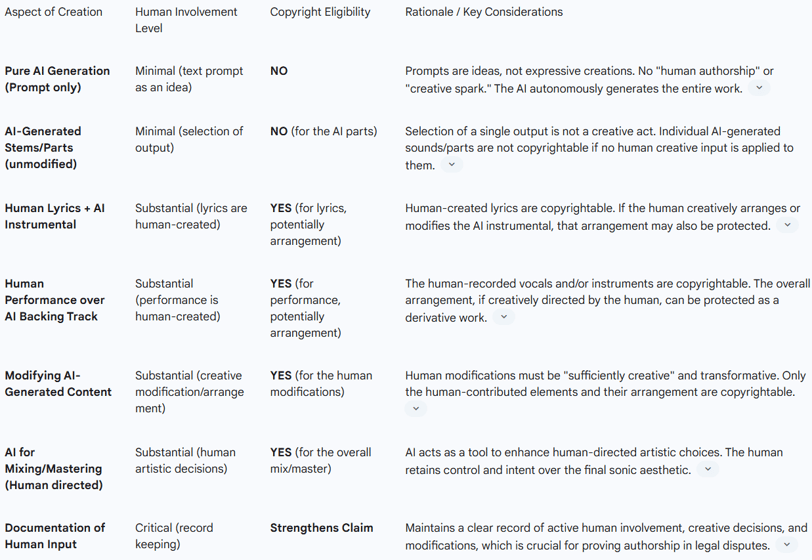

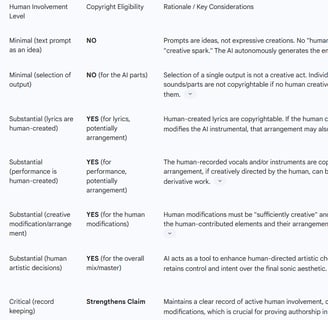

Table 2: Copyright Checklist for AI-Assisted Music

Conclusion: The Future is a Human-AI Symphony

The journey through the evolving landscape of music technology makes one thing abundantly clear: Artificial Intelligence is not a threat to human creativity in rock music, but an extraordinary amplifier. From sparking lyrical ideas to generating intricate instrumentals and refining the final mix, AI tools offer unprecedented opportunities for efficiency, experimentation, and pushing artistic boundaries. It is about embracing a new era of co-creation, where the artist remains the visionary, wielding powerful algorithms as extensions of their creative will.

While AI can mimic patterns and generate technically proficient music, the true "soul" and emotional resonance of a rock song still stem from human experience, vulnerability, and intent. The raw energy of a guitar riff, the heartfelt delivery of a lyric, the subtle imperfections that give a track its unique character—these are the indelible marks of human authorship. It is the human artist who infuses the music with meaning, who connects with listeners on a visceral level, and who ultimately defines the artistic vision that AI tools merely help to realize.

The future of rock music production is poised to become a thrilling human-AI symphony. By understanding the capabilities of AI, navigating the copyright landscape wisely, and always prioritizing one's unique artistic voice, musicians can unlock new dimensions of creativity. The concept of "authenticity" in music is increasingly tied to provenance (the human origin and "soul") rather than solely to the auditory experience or its immediate emotional effect. As AI-generated music becomes harder to distinguish from human-made music in sound quality and even emotional mimicry, perceived value may shift to the narrative surrounding its creation, emphasizing the human story behind the sound. This dynamic will continue to shape how music is created, consumed, and valued in the years to come.

The call is not merely to adapt to this technological wave, but to ride it, shape it, and allow it to propel rock music to unprecedented heights. The stage is set for the next generation of rock anthems, powered by human heart and AI mind.

